NLP的文本预处理实例-IMDb电影评论集

- 蒙面西红柿

- 2,731

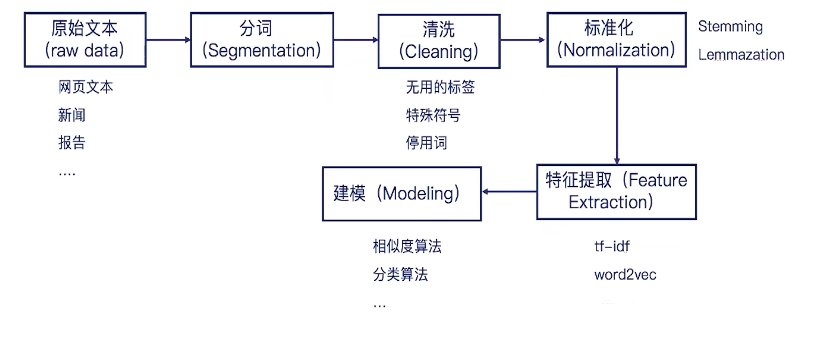

自然语言处理NLP(nature language processing),顾名思义,就是使用计算机对语言文字进行处理的相关技术以及应用。在对文本做数据分析时,我们一大半的时间都会花在文本预处理上。本文结合IMDb数据集,对英文文本常用的NLP的文本预处技术做一个总结。

1. 收集数据

文本数据的获取一般有两个方法:

1. 别人已经做好的数据集,或则第三方语料库,比如wiki。这样可以省去很多麻烦。

2. 自己从网上爬取数据。但很多情况所研究的是面向某种特定的领域,这些开放语料库经常无法满足我们的需求。我们就需要用爬虫去爬取想要的信息了。可以使用如beautifulsoup、scrapy等框架编写出自己需要的爬虫。

本项目使用的是Kaggle的IMDB数据集,包含49582条电影评论的.csv文件。文件内有两列,评论与该评论对应的情感值(positive/negative)

将文件下载以后,使用pandas的read_csv方法读取此数据集

dataset = pd.read_csv("./data/IMDB Dataset.csv")

dataset.info()使用info查看此数据集的结构,输出如下:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 50000 entries, 0 to 49999

Data columns (total 2 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 review 50000 non-null object

1 sentiment 50000 non-null object

dtypes: object(2)

memory usage: 781.4+ KB2. 清洗

2.1 去除HTML Tags

此数据集是从网页爬取获得,因此爬取的评论中有很多无用的html符号。接下来用一条评论举例:

sample = dataset['review'].loc[1]

sample'A wonderful little production. <br /><br />The filming technique is very unassuming- very old-time-BBC fashion and gives a comforting, and sometimes discomforting, sense of realism to the entire piece. <br /><br />The actors are extremely well chosen- Michael Sheen not only "has got all the polari" but he has all the voices down pat too! You can truly see the seamless editing guided by the references to Williams\' diary entries, not only is it well worth the watching but it is a terrificly written and performed piece. A masterful production about one of the great master\'s of comedy and his life. <br /><br />The realism really comes home with the little things: the fantasy of the guard which, rather than use the traditional \'dream\' techniques remains solid then disappears. It plays on our knowledge and our senses, particularly with the scenes concerning Orton and Halliwell and the sets (particularly of their flat with Halliwell\'s murals decorating every surface) are terribly well done.'可以看到有很多无用的<br>符号。

使用正则表达式去掉这些符号:

import re

cleanr = re.compile('<.*?>')

clean_reviews1 = re.sub(cleanr, '', reviews)2.2 去除无用符号

在如上的输出中,除了<br>等html tags以外还有 \ 等符号。在别的评论中,还有 | 符号。因此这一步的目的是去除所有的无用符号只保留字母。

clean_reviews2 = re.sub('[^a-zA-Z]', ' ', clean_reviews1)2.3 将所有字母转化为小写

clean_reviews3 = clean_reviews2.lower()2.4 分词

分词以后可以为后续的去除停用词和词干提取做准备

clean_reviews4 = clean_reviews3.split()2.5 去除停用词

停用词就是句子没什么必要的单词,去掉他们以后对理解整个句子的语义没有影响。文本中,会存在大量的虚词、代词或者没有特定含义的动词、名词,这些词语对文本分析起不到任何的帮助,我们往往希望能去掉这些“停用词”。

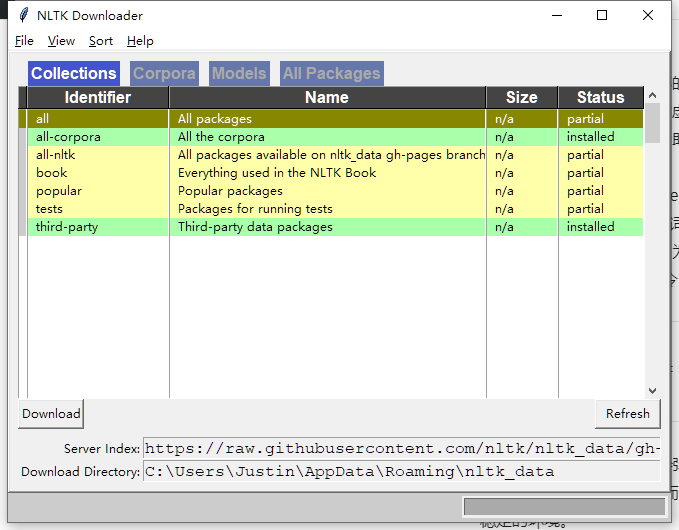

在英文中,例如,”a”,”the”,“to”,“their”等冠词,借此,代词….. 我们可以直接用nltk中提供的英文停用词表。首先,”pip install nltk”安装nltk。当你完成这一步时,其实是还不够的。因为NLTK是由许多许多的包来构成的,此时运行Python,并输入下面的指令。

import nltk

from nltk.tokenize import word_tokenize

nltk.download()然后,Python Launcher会弹出下面这个界面,你可以选择安装所有的Packages,以免去日后一而再、再而三的进行安装,也为你的后续开发提供一个稳定的环境。

我们可以运行下面的代码,看看英文的停用词库。

from nltk.corpus import stopwords

stop = set(stopwords.words('english'))

print(stop){'same', "she's", 'and', 'some', 'does', 'did', 'too', 'below', 'with', 'further', "didn't", 'ain', 'not', 'there', 'was', 'why', 'm', 'shan', 'about', 'each', 'own', 'yours', 'their', "don't", 'didn', 'isn', 'hasn', "needn't", 'here', 'themselves', "isn't", 'what', 'off', 'when', 'such', 'she', 'itself', 'wouldn', 'who', 'during', 'over', 'you', 'before', 'we', 'be', 'it', 'the', 'down', 'as', 'by', 'which', 'don', 'can', 'between', 'other', 'my', 'all', 'then', 'how', 'do', 'no', 'until', 'doesn', 'have', 'on', 'where', 'our', "it's", 'needn', 'both', 'am', 'few', "you're", 'for', "you've", "couldn't", 'is', 'having', 'are', 'into', 'him', 'couldn', 'hadn', 'against', 'through', 'they', 'so', 'those', "shouldn't", "wasn't", 'yourselves', 'while', 'mustn', 'weren', 'i', 'now', 'being', 's', 'from', 'these', 'any', "haven't", 'in', 'an', 'if', 'nor', 're', 'this', "aren't", 'mightn', 'very', 'up', "that'll", 'just', 'will', 'o', 'more', 'he', 'but', "you'd", 'because', 'at', 'ma', "mustn't", 'of', 'out', "wouldn't", 'above', 'to', 'whom', 'myself', 'were', 'doing', "you'll", 'yourself', "should've", "weren't", 'won', 'aren', "shan't", 'her', 'wasn', 'or', 'your', 'hers', "hadn't", 'under', 'had', 'should', 'haven', 'shouldn', 'than', 'that', 'y', 'only', "doesn't", 'me', 'herself', 'd', 'a', 'again', "hasn't", 'his', 'ourselves', "mightn't", 'himself', 'after', 'theirs', 'its', 'them', 'been', "won't", 've', 'has', 'once', 't', 'most', 'll', 'ours'}使用如下代码去除目标文本中的停用词:

#import stopwords

stop = set(stopwords.words('english'))

#remove stopwords

clean_reviews5 = [word for word in clean_reviews4 if not word in set(stopwords.words('english'))]3. 词干提取

词干提取(stemming)和词型还原(lemmatization)是英文文本预处理的特色。两者其实有共同点,即都是要找到词的原始形式。只不过词干提取(stemming)会更加激进一点,它在寻找词干的时候可以会得到不是词的词干。比如”leaves”的词干可能得到的是”leav”, 并不是一个词。而词形还原则保守一些,它一般只对能够还原成一个正确的词的词进行处理。nltk中提供了很多方法,wordnet的方式比较好用,不会把单词过分精简。本项目选择词形还原。

from nltk.stem import WordNetLemmatizer

lem = WordNetLemmatizer()

clean_reviews6 = [lem.lemmatize(word) for word in clean_reviews5]最后将处理完的单词还原成一个句子:

clean_reviews6 = ' '.join(clean_reviews6)对比处理之前的文本与处理之后的文本:

处理之前的文本:

'A wonderful little production. <br /><br />The filming technique is very unassuming- very old-time-BBC fashion and gives a comforting, and sometimes discomforting, sense of realism to the entire piece. <br /><br />The actors are extremely well chosen- Michael Sheen not only "has got all the polari" but he has all the voices down pat too! You can truly see the seamless editing guided by the references to Williams\' diary entries, not only is it well worth the watching but it is a terrificly written and performed piece. A masterful production about one of the great master\'s of comedy and his life. <br /><br />The realism really comes home with the little things: the fantasy of the guard which, rather than use the traditional \'dream\' techniques remains solid then disappears. It plays on our knowledge and our senses, particularly with the scenes concerning Orton and Halliwell and the sets (particularly of their flat with Halliwell\'s murals decorating every surface) are terribly well done.'处理之后的文本:

['wonderful little production filming technique unassuming old time bbc fashion give comforting sometimes discomforting sense realism entire piece actor extremely well chosen michael sheen got polari voice pat truly see seamless editing guided reference williams diary entry well worth watching terrificly written performed piece masterful production one great master comedy life realism really come home little thing fantasy guard rather use traditional dream technique remains solid disappears play knowledge sens particularly scene concerning orton halliwell set particularly flat halliwell mural decorating every surface terribly well done']4. 特征提取

数据处理到这里,基本上是干净的文本了,现在可以调用sklearn来对我们的文本特征进行处理了。常用的方法有Tf-idf, Ngram等。

4.1 Ngram

N-gram模型是一种语言模型(Language Model),语言模型是一个基于概率的判别模型,它的输入是一句话(单词的顺序序列),输出是这句话的概率,即这些单词的联合概率(joint probability)。N-gram本身也指一个由N个单词组成的集合,各单词具有先后顺序,且不要求单词之间互不相同。常用的有 Bi-gram (N=2N=2) 和 Tri-gram (N=3N=3),一般已经够用了。例如,”I love deep learning”,可以分解的 Bi-gram 和 Tri-gram :

Bi-gram : {I, love}, {love, deep}, {love, deep}, {deep, learning}

Tri-gram : {I, love, deep}, {love, deep, learning}

本项目使用如下代码应用Ngram:

#N-gram

from sklearn.feature_extraction.text import CountVectorizer

vector = CountVectorizer(min_df=1, ngram_range=(2,2))

X = vector.fit_transform(clean_sample)

print(vector.vocabulary_ )

print(X)

df1 = pd.DataFrame(X.toarray(), columns=vector.get_feature_names())

df1.head()如下是输出的一部分,可以看出Ngram的原理:

{'wonderful little': 82, 'little production': 31, 'production filming': 48, 'filming technique': 19, 'technique unassuming': 67, 'unassuming old': 74, 'old time': 37, 'time bbc': 71, 'bbc fashion': 1, 'fashion give': 18, 'give comforting': 21, 'comforting sometimes': 5, 'sometimes discomforting': 64, 'discomforting sense': 10, 'sense realism': 60, 'realism entire': 51, 'entire piece': 13, 'piece actor': 44, 'actor extremely': 0, 'extremely well': 16, 'well chosen': 78, 'chosen michael': 2, 'michael sheen': 35, 'sheen got': 62, 'got polari': 22, 'polari voice': 47, 'voice pat': 76, 'pat truly': 42, 'truly see': 73, 'see seamless': 58, 'seamless editing': 57, 'editing guided': 12, 'guided reference': 25, 'reference williams': 54, 'williams diary': 81, 'diary entry': 8, 'entry well': 14, 'well worth': 80, 'worth watching': 83, 'watching terrificly': 77, 'terrificly written': 69, 'written performed': 84, 'performed piece': 43, 'piece masterful': 45, 'masterful production': 34, 'production one': 49, 'one great': 38, 'great master': 23, 'master comedy': 33, 'comedy life': 4, 'life realism': 30, 'realism really': 52, 'really come': 53, 'come home': 3, 'home little': 28, 'little thing': 32, 'thing fantasy': 70, 'fantasy guard': 17, 'guard rather': 24, 'rather use': 50, 'use traditional': 75, 'traditional dream': 72, 'dream technique': 11, 'technique remains': 66, 'remains solid': 55, 'solid disappears': 63, 'disappears play': 9, 'play knowledge': 46, 'knowledge sens': 29, 'sens particularly': 59, 'particularly scene': 41, 'scene concerning': 56, 'concerning orton': 6, 'orton halliwell': 39, 'halliwell set': 27, 'set particularly': 61, 'particularly flat': 40, 'flat halliwell': 20, 'halliwell mural': 26, 'mural decorating': 36, 'decorating every': 7, 'every surface': 15, 'surface terribly': 65, 'terribly well': 68, 'well done': 79}

(0, 82) 1

(0, 31) 1

(0, 48) 1

(0, 19) 1

(0, 67) 1

(0, 74) 1

(0, 37) 1

(0, 71) 1

(0, 1) 1

(0, 18) 1

(0, 21) 1

(0, 5) 1

...4.2 Tf-idf(Term Frequency-Inverse Document Frequency)

该模型基于词频,将文本转换成向量,而不考虑词序。假设现在有N篇文档,在其中一篇文档D中,词汇x的TF、IDF、TF-IDF定义如下:

1.Term Frequency(TF(x)):指词x在当前文本D中的词频

2.Inverse Document Frequency(IDF): N代表语料库中文本的总数,而N(x)代表语料库中包含词x的文本总数

本项目也尝试了TF-idf特征提取方法:

#Tf-idf

from sklearn.feature_extraction.text import TfidfVectorizer

vector = TfidfVectorizer(ngram_range=(2, 2))

train_vector = vector.fit_transform(df_train)

test_vector = vector.transform(df_test)从结果来看,基于线性SVC的情况下,Ngram的训练结果在0.898左右,Tf-idf为0.879。

文章评论

学习了,谢谢!